Type: Article -> Category: AI Philosophy

Feeding the Machine

The Reflection of Human Violence in AI Outputs

Last Updated: 10th November 2025

Author: Nick Smith, with the help of ChatGPT

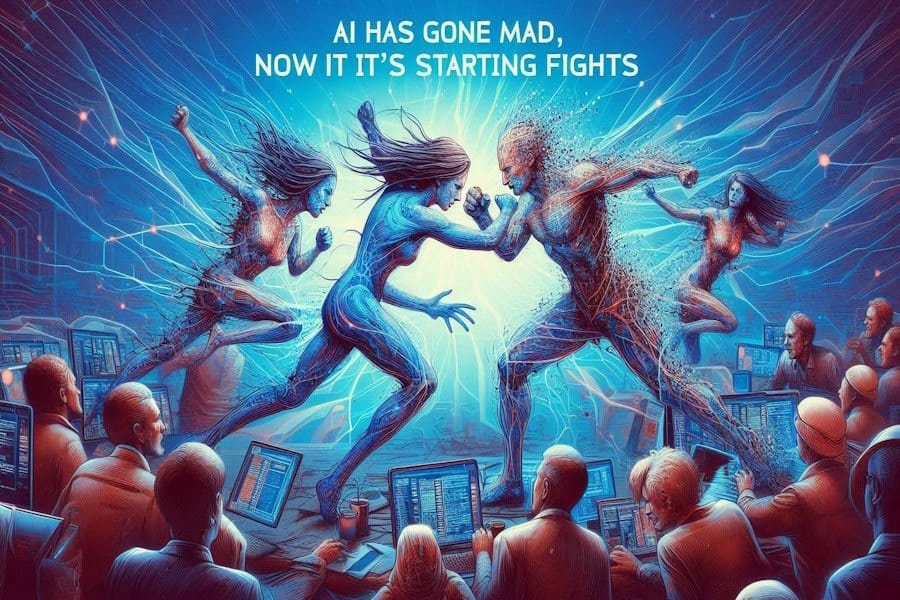

Artificial Intelligence (AI) has rapidly evolved, becoming an integral part of our daily lives. From virtual assistants to content generation, AI systems are shaping how we interact with technology and, by extension, each other. Recently, an AI-generated image depicting two women and a man fighting prompted the remark that "AI has gone mad; now it's starting fights." This raises a crucial philosophical question: Is AI inherently violent, or does it merely reflect the data and intentions of its human creators?

The Mirror of Data

At its core, AI is a mirror reflecting the data it has been trained on. Machine learning models learn patterns, behaviors, and associations from vast datasets curated and provided by humans. If the input data contains violent content, biases, or prejudiced narratives, the model is likely to reproduce similar material in its outputs. This is not a sign of AI developing consciousness or intent but an indication of the information it has absorbed.

Human Responsibility in AI Training

The responsibility for the AI's output lies with those who design and train it. AI does not possess consciousness or moral judgment; it cannot discern right from wrong or understand the ethical implications of its outputs. The inclusion of violent imagery or narratives in AI-generated content is a direct consequence of the training data provided by humans. If we feed AI models with violent or aggressive content, we should not be surprised when they produce similar material.

The Ethical Implications

This issue brings forth several ethical considerations:

- Data Curation: The selection of training data is paramount. Curators must be vigilant in ensuring that the datasets are free from harmful content that could lead to undesirable outputs. This includes filtering out violence, hate speech, and other negative influences unless the AI is specifically designed for purposes that require such data (e.g., law enforcement analysis).

- Bias and Representation: AI can perpetuate and even amplify societal biases present in the training data. If certain groups are portrayed negatively or if violent interactions are overrepresented, the AI will learn and reproduce these patterns, potentially leading to harmful stereotypes and discrimination.

- Accountability: Developers and organizations deploying AI systems must take responsibility for their creations. This includes implementing safeguards, monitoring outputs, and being prepared to make adjustments when the AI produces inappropriate or harmful content.
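To make the data curation and representation points concrete, here is a minimal Python sketch of filtering image captions before training and reporting how much of the dataset was flagged. The blocklist `BLOCKED_TERMS` and the functions `is_flagged` and `curate` are hypothetical names invented for this illustration; a real pipeline would more plausibly rely on a trained content classifier and human review than on keyword matching.

```python
# Hypothetical blocklist, for illustration only; keyword matching is a crude
# stand-in for the classifiers and human review a real pipeline would use.
BLOCKED_TERMS = {"fight", "attack", "assault"}


def is_flagged(caption: str) -> bool:
    """Return True if any blocked term appears as a whole word in the caption."""
    words = {w.strip(".,!?;:\"'").lower() for w in caption.split()}
    return not words.isdisjoint(BLOCKED_TERMS)


def curate(captions: list[str]) -> tuple[list[str], float]:
    """Drop flagged captions and report the share of the input that was flagged."""
    kept = [c for c in captions if not is_flagged(c)]
    flagged_share = 1 - len(kept) / len(captions) if captions else 0.0
    return kept, flagged_share


if __name__ == "__main__":
    sample = [
        "Two people fight in a street.",
        "A dog sleeps on a sofa.",
        "Children attack a pinata!",
        "A quiet lake at dawn.",
    ]
    kept, share = curate(sample)
    print(kept)
    print(f"Flagged share: {share:.0%}")
```

The flagged share is a rough proxy for how heavily violent interactions are represented in the data. The pinata caption also shows the limits of keyword matching: it is flagged even though the scene is harmless, which is why human judgment stays part of curation.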

The Illusion of Autonomous Intent

Attributing autonomous intent to AI, such as claiming it is "starting fights," anthropomorphizes the technology and shifts the focus away from human accountability. AI lacks consciousness and cannot initiate actions based on desires or emotions. By personifying AI, we risk absolving ourselves of responsibility and ignoring the underlying issues in data selection and algorithm design.

The Path Forward

To address these concerns, several steps can be taken:

- Ethical Frameworks: Establishing ethical guidelines for AI development ensures that considerations about violence, bias, and representation are integral to the design process.

- Diverse Data Sets: Using diverse and carefully vetted datasets can minimize the risk of the AI reproducing harmful content. Including a wide range of perspectives can help create more balanced and fair outputs.

- Transparency: Open communication about how AI systems are trained and how they function allows for public scrutiny and collaborative improvement. Transparency fosters trust and accountability.

- Continuous Monitoring: AI systems should be regularly monitored for unintended outputs. Feedback mechanisms can help developers make necessary adjustments to the training data or algorithms.
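The continuous monitoring step could be sketched roughly as follows. The `OutputMonitor` class, its window size, and its threshold are assumptions made for this example rather than an established API; the sketch also assumes some separate check (human or automated) has already decided whether each output is inappropriate.

```python
from collections import deque


class OutputMonitor:
    """Track the share of recent outputs flagged as inappropriate (illustrative only)."""

    def __init__(self, window: int = 100, threshold: float = 0.05):
        # Only the most recent `window` results are kept; older ones drop off.
        self.recent = deque(maxlen=window)
        self.threshold = threshold

    def record(self, flagged: bool) -> None:
        """Store whether the latest output was flagged by an upstream check."""
        self.recent.append(flagged)

    def flagged_rate(self) -> float:
        """Return the fraction of flagged outputs in the current window."""
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def needs_review(self) -> bool:
        """Signal that developers should revisit the training data or safeguards."""
        return self.flagged_rate() > self.threshold


if __name__ == "__main__":
    monitor = OutputMonitor(window=4, threshold=0.25)
    for flagged in [False, False, False, True, True]:
        monitor.record(flagged)
    print(monitor.flagged_rate(), monitor.needs_review())
```

A rolling window is used so that the signal reflects recent behavior rather than the system's whole history; the decision about what to do when review is needed remains with the people responsible for the system.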

Conclusion

The incident of an AI generating an image of conflict is not a testament to the technology's inherent flaws but a reflection of the data it was provided. It underscores the critical role humans play in shaping AI outputs. By acknowledging that AI is a product of our inputs, we accept the responsibility to guide it ethically. Feeding AI violence will yield violent outputs; conversely, if we nurture it with positive, diverse, and balanced data, we can harness its potential for the betterment of society.
