Understanding AI Hallucinations

Risks, Solutions, and the Human Edge in Critical Systems

Last Updated: 10th November 2025

Author: Nick Smith, with the help of Grok 3


What Are AI Hallucinations?

AI hallucinations occur when artificial intelligence systems generate incorrect or fabricated outputs, presenting them as factual or accurate. Unlike human hallucinations—sensory misperceptions like hearing voices or seeing objects that aren’t there—AI hallucinations manifest as confident but erroneous responses, such as inventing historical events, misinterpreting data, or generating plausible but false information. For example, a chatbot might claim a fictional person won an award, or an image recognition system might misidentify an object due to unfamiliar input.

The term "hallucination" in AI draws from its human counterpart, reflecting a parallel: both involve perceiving something that doesn’t align with reality. In AI, this happens when models, particularly large language models (LLMs) or generative AI, extrapolate beyond their training data, filling gaps with seemingly logical but incorrect outputs. This phenomenon is especially concerning in high-stakes applications like aviation, healthcare, or defense, where errors can have catastrophic consequences.

A Brief History of AI Hallucinations

The concept of AI hallucinations emerged as AI systems, particularly neural networks, grew more complex. Early AI, like rule-based systems in the 1960s and 1970s, followed strict logic and rarely "hallucinated" because their outputs were tightly constrained. However, the rise of machine learning in the 1980s and deep learning in the 2010s introduced models that learned patterns from vast datasets, enabling more flexible but error-prone behavior.

The term "hallucination" gained traction in the late 2010s with the spread of generative models such as GANs (Generative Adversarial Networks, introduced in 2014) and neural text generators, including early LLMs. Researchers noticed these models could produce convincing but false outputs: images, text, or predictions that deviated from reality. For instance, GANs generating realistic faces sometimes produced implausible artifacts, while chatbots like Google's Meena (2020) occasionally fabricated facts. By 2023, as models like ChatGPT (released in late 2022) and its successors became mainstream, "AI hallucination" became a widely recognized term, reflecting public and academic concern over reliability in AI-driven decisions.

The name "hallucination" stuck because it vividly captures the AI’s tendency to "see" or "assert" things that aren’t grounded in its data, much like a human experiencing a false perception. This analogy, while not perfect, highlights the challenge of ensuring AI outputs align with reality, especially in uncharted scenarios.

General AI vs. Specialized AI: A Tale of Hallucination Risks

Not all AI systems hallucinate equally. The frequency and impact of hallucinations vary significantly between general AI and specialized AI, driven by their design and purpose.

General AI: Broad but Prone to Errors

General AI, such as LLMs used in chatbots or virtual assistants, is designed for versatility, handling tasks from answering trivia to writing essays. These models are trained on vast, diverse datasets—think billions of web pages, books, and social media posts. While this enables flexibility, it also increases the risk of hallucinations, as the data may contain inconsistencies, gaps, or noise.

For example, if asked about a niche topic like a rare historical event, a general AI might piece together plausible but incorrect details and confidently present them as fact. Estimates vary widely with the model, the benchmark, and the task, but studies have reported general-purpose LLMs hallucinating in roughly 5-20% of responses on complex or knowledge-intensive tasks, particularly when data is sparse or ambiguous. The root cause is how these models work: they generate text by repeatedly predicting a likely next word (token), which rewards fluency and coherence rather than verified accuracy, and their open-ended tasks leave plenty of room for confident errors.
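To see why fluent output can still be false, here is a deliberately simplified sketch of next-token sampling. It assumes a made-up prompt about a fictional physicist and invented scores (`LOGITS`); real models score tens of thousands of sub-word tokens, not whole phrases. The point is that nothing in the loop checks whether the person exists: the sampler simply favors whichever continuation scored as most plausible.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; temperature < 1 sharpens, > 1 flattens."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical prompt: "Dr. Jane Roe, a physicist, won the ..." (Dr. Roe is fictional.)
# Hypothetical scores: fluent award names score high, while an honest
# "I don't know" continuation scores low because it is rarer in text.
CANDIDATES = ["Nobel Prize", "Wolf Prize", "Dirac Medal", "[I don't know]"]
LOGITS = [3.0, 2.2, 1.8, 0.5]

def sample_completion(rng, temperature=1.0):
    """Draw one continuation at random, weighted by its probability."""
    probs = softmax(LOGITS, temperature)
    return rng.choices(CANDIDATES, weights=probs, k=1)[0]

if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    draws = [sample_completion(rng) for _ in range(1000)]
    for name in CANDIDATES:
        print(f"{name:15s} {draws.count(name) / len(draws):.1%}")
```

With these made-up scores, the "[I don't know]" option receives under 5% of the probability mass, so the vast majority of samples confidently name an award for a person who does not exist. Lowering `temperature` makes the top answer even more dominant; it does not make it more true.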

Specialized AI: Focused and Reliable

Specialized AI, used in domains like aviation autopilots, medical diagnostics, or fraud detection, is built for specific tasks with curated, high-quality datasets. These systems operate within strict boundaries, reducing the chance of hallucinations. For instance, an autopilot relies on real-time sensor data (e.g., altitude, speed) and predefined rules, not creative extrapolation. Reported error rates for some specialized systems are as low as 1-2% in controlled settings, thanks to rigorous training and validation.

However, specialized AI isn’t immune to errors. If it encounters a scenario far outside its training—say, an unprecedented combination of failures in a plane—it may misinterpret data, though it’s less likely to "invent" solutions the way a general AI might. Instead, it typically defaults to safe modes or human intervention, as seen in modern aviation systems.
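One simple way a specialized system can notice that it has left familiar territory is to compare each input against the range covered by its training data and defer instead of answering. The sketch below assumes hypothetical feature names (`airspeed_kt`, `vertical_speed_fpm`) and a stand-in model; a per-feature min/max envelope is the crudest form of out-of-distribution detection, and production systems use far richer monitors.

```python
from dataclasses import dataclass

@dataclass
class Envelope:
    """Per-feature minimum and maximum seen in the (hypothetical) training data."""
    low: dict
    high: dict

    @classmethod
    def from_samples(cls, samples):
        keys = samples[0].keys()
        return cls(
            low={k: min(s[k] for s in samples) for k in keys},
            high={k: max(s[k] for s in samples) for k in keys},
        )

    def violations(self, reading):
        """Names of features whose value falls outside the training range."""
        return [k for k in self.low
                if not (self.low[k] <= reading[k] <= self.high[k])]

def predict_or_defer(model, envelope, reading):
    """Run the model only on familiar inputs; otherwise hand off with a reason."""
    outside = envelope.violations(reading)
    if outside:
        return ("DEFER_TO_HUMAN", outside)  # never seen anything like this: don't guess
    return ("OK", model(reading))

def toy_model(reading):
    """Stand-in for a real classifier."""
    return "nominal"

if __name__ == "__main__":
    training = [
        {"airspeed_kt": 140, "vertical_speed_fpm": -700},
        {"airspeed_kt": 250, "vertical_speed_fpm": 1800},
        {"airspeed_kt": 310, "vertical_speed_fpm": 0},
    ]
    env = Envelope.from_samples(training)
    print(predict_or_defer(toy_model, env, {"airspeed_kt": 200, "vertical_speed_fpm": 500}))
    print(predict_or_defer(toy_model, env, {"airspeed_kt": 200, "vertical_speed_fpm": -6000}))
```

The first reading sits inside the training envelope and is passed to the model; the second has a descent rate far beyond anything in the training samples, so the function returns the offending feature name instead of a guess.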

Redundancy: The Safety Net for Critical Systems

In high-stakes applications like aviation, redundancy is a cornerstone of mitigating AI hallucinations. Aircraft autopilots, for example, integrate multiple sensors (e.g., radar, GPS, inertial navigation) to cross-check data, ensuring no single point of failure leads to catastrophe. If an AI misinterprets one sensor’s input due to an untrained scenario, others can flag the discrepancy.
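The cross-checking described above is often implemented as voting among redundant channels. Below is a minimal sketch of one such scheme, mid-value select; the function name, the tolerance, and the altitude figures are illustrative assumptions, not taken from any certified avionics code.

```python
from dataclasses import dataclass
from statistics import median

@dataclass
class VoteResult:
    value: float   # value forwarded to the control logic
    suspect: list  # indices of channels that disagree with the voted value

def mid_value_select(readings, tolerance):
    """Vote among redundant sensor readings.

    With three channels, the median cannot be pulled to an extreme by a
    single faulty channel: a bad reading is only selected if it lies between
    the two good ones, and is therefore bounded by them.
    """
    if len(readings) < 3:
        raise ValueError("need at least three redundant readings to vote")
    voted = median(readings)
    suspect = [i for i, r in enumerate(readings) if abs(r - voted) > tolerance]
    return VoteResult(voted, suspect)

if __name__ == "__main__":
    # Hypothetical altitude readings in feet; channel 2 has failed low.
    result = mid_value_select([10_000, 10_020, 3_500], tolerance=100)
    print(result)  # VoteResult(value=10000, suspect=[2])
```

The failed channel is outvoted rather than trusted, and it is flagged so the discrepancy can be reported instead of silently ignored.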

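Consider a real-world example: the Boeing 737 MAX crashes of Lion Air Flight 610 (2018) and Ethiopian Airlines Flight 302 (2019). The Maneuvering Characteristics Augmentation System (MCAS), an automated flight-control function (conventional software rather than machine learning, but AI-like in that it acted on sensor data without pilot input), relied on a single faulty angle-of-attack sensor, read its erroneous data as an approaching stall, and repeatedly commanded nose-down trim. This wasn't a hallucination in the creative sense but a failure to handle faulty input the system wasn't designed for. After the accidents, Boeing redesigned MCAS to compare inputs from both angle-of-attack sensors, activate only once per event, and limit its control authority, highlighting how redundancy guards against such errors.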

In military applications, like enemy detection systems, redundancy works similarly. AI might analyze radar, infrared, and visual data to identify threats. If one sensor's data leads to an anomalous result (e.g., a civilian plane classified as a threat), cross-checks with other sensors or human confirmation prevent rash decisions. These layers make it far less likely that the system acts on erroneous outputs, even in novel situations.
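The same idea can be expressed as a simple gating rule: a threat call is escalated only when enough independent sensors agree, and even then the automation asks a human rather than acting. The sensor names, labels, and quorum below are hypothetical.

```python
from enum import Enum

class Action(Enum):
    IGNORE = "no sensor reports a threat"
    MONITOR = "sensors disagree: keep tracking, do not escalate"
    ASK_HUMAN = "sensors agree: request human confirmation"

def assess_track(labels, quorum):
    """Decide what to do with one tracked object.

    `labels` maps a sensor name to its classification ("threat", "civilian", ...).
    There is deliberately no branch that acts autonomously: the strongest
    outcome is a request for human confirmation.
    """
    if not 1 <= quorum <= len(labels):
        raise ValueError("quorum must be between 1 and the number of sensors")
    votes = sum(1 for label in labels.values() if label == "threat")
    if votes == 0:
        return Action.IGNORE
    if votes < quorum:
        return Action.MONITOR
    return Action.ASK_HUMAN

if __name__ == "__main__":
    # Radar alone flags a civilian airliner as a threat; the other sensors disagree.
    track = {"radar": "threat", "infrared": "civilian", "visual": "civilian"}
    print(assess_track(track, quorum=3).value)
```

Requiring unanimous agreement (`quorum=3`) is a conservative choice; a lower quorum escalates more often but still routes every escalation through a person.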

What Happens in Untrained Scenarios? A Case Study

Imagine a commercial airplane encountering a never-before-seen scenario: a rare atmospheric phenomenon combined with a simultaneous failure of multiple systems (e.g., engine and hydraulics). How does the AI autopilot respond?

- Generalization Attempt: The autopilot tries to match the situation to its training, using sensor data to apply learned patterns. If the scenario is too novel, it might misinterpret inputs, like mistaking turbulence for a control issue.

- Uncertainty Handling: Advanced systems estimate confidence levels. If the AI detects low confidence (i.e., the scenario is unrecognizable), it triggers an error state or safe mode, such as maintaining stable flight.

- Fallback Mechanisms: The system hands control to human pilots via alerts, ensuring no speculative actions are taken. Redundant systems, like backup hydraulics or manual controls, provide additional safety.

- Outcome: Rather than “making it up,” the AI avoids risky improvisation, relying on predefined protocols or human intervention to navigate the unknown.
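The fallback sequence above can be sketched as a small mode-transition function. The single `confidence` score, the 0.9 threshold, and the mode names are assumptions for illustration; real flight-control systems rely on many dedicated monitors rather than one number. The key design choices are that low confidence leads to a conservative hold rather than improvisation, and that only a pilot ends that hold.

```python
from enum import Enum, auto

class Mode(Enum):
    NORMAL = auto()     # automation flying on its programmed/learned logic
    SAFE_HOLD = auto()  # conservative fallback (e.g. hold attitude and altitude) plus a pilot alert
    PILOT = auto()      # a human has taken control

def next_mode(mode, confidence, pilot_took_over, threshold=0.9):
    """Return the next automation mode.

    - A pilot takeover always wins, and the automation never reverses it.
    - Low confidence in NORMAL drops to SAFE_HOLD instead of improvising.
    - SAFE_HOLD does not resume NORMAL by itself, even if confidence recovers.
    """
    if pilot_took_over or mode is Mode.PILOT:
        return Mode.PILOT
    if mode is Mode.NORMAL and confidence < threshold:
        return Mode.SAFE_HOLD
    return mode

if __name__ == "__main__":
    # Hypothetical trace: routine flight, an unrecognized event, then a pilot takeover.
    trace = [(0.98, False), (0.95, False), (0.42, False), (0.97, False), (0.97, True)]
    mode = Mode.NORMAL
    for confidence, takeover in trace:
        mode = next_mode(mode, confidence, takeover)
        print(f"confidence={confidence:.2f} takeover={takeover} -> {mode.name}")
```

Note the fourth step: confidence bounces back to 0.97, but the system stays in `SAFE_HOLD` until the pilot acts, rather than silently resuming control.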

This approach contrasts with general AI, which might hallucinate a response in an open-ended context. Specialized systems, by design, prioritize safety over creativity, minimizing the risk of catastrophic errors.

Combating AI Hallucinations: Current Efforts

The AI community is actively tackling hallucinations through a multi-faceted approach, aiming to enhance reliability across both general and specialized systems:

-

Enhanced Training Data:

-

For specialized AI, developers prioritize high-quality, domain-specific datasets. For example, aviation autopilots are trained on extensive flight data, including rare edge cases, to minimize misinterpretations.

-

General AI benefits from efforts like data debiasing and integrating fact-checking mechanisms to reduce inconsistencies in broad datasets scraped from the web or public sources.

-

-

Uncertainty Quantification:

-

Advanced models are being designed to estimate and communicate uncertainty. If a general AI encounters an unfamiliar query, it might respond with “I’m unsure” or request clarification. In specialized systems, like autopilots, low-confidence outputs trigger safe modes or human alerts rather than speculative actions.

-

-

Adversarial Testing:

-

Developers stress-test AI with adversarial inputs—deliberately challenging or misleading data—to identify hallucination triggers. For instance, an autopilot might be tested against simulated sensor failures to ensure it responds correctly.

-

-

Human-in-the-Loop Systems:

-

Critical applications integrate human oversight as a fail-safe. In aviation, pilots monitor autopilot outputs and can override them instantly. Similarly, in medical diagnostics, doctors verify AI findings, ensuring errors don’t go unchecked.

-

-

Model Constraints and Techniques:

-

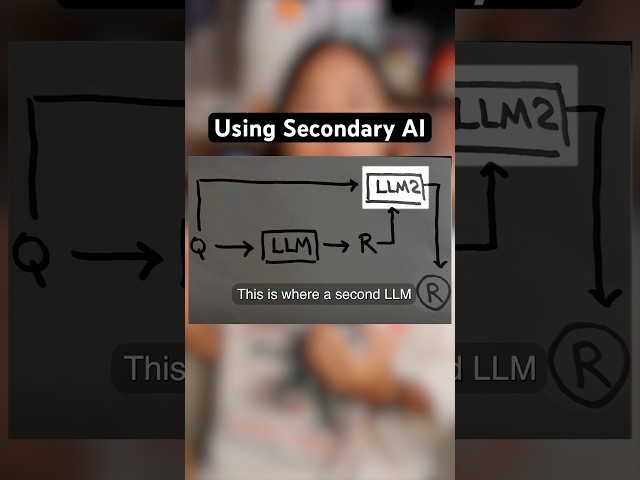

Specialized AI often uses deterministic algorithms or constrained models to limit creative outputs, reducing hallucination risks. For general AI, techniques like retrieval-augmented generation (RAG) anchor responses in verified sources, improving factual accuracy.

-

Regular model updates incorporate lessons from real-world errors, refining performance over time.

-

-

Regulatory Oversight:

-

Industries like aviation adhere to strict standards, such as the FAA’s DO-178C for software certification. These require extensive testing, redundancy, and documentation to ensure AI systems are robust against untrained scenarios.

-
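For general AI, one practical way to "estimate and communicate uncertainty", as described in the list above, is to ask the same question several times and answer only when the replies agree. This agreement-based check is one technique among several, swapped in here for illustration; `ask` is a hypothetical hook standing in for any model call that samples with non-zero temperature, and the thresholds are arbitrary.

```python
from collections import Counter
from itertools import cycle

UNSURE = "I'm unsure. Could you rephrase or add more context?"

def answer_or_abstain(ask, question, samples=5, min_agreement=0.6):
    """Sample several answers and return the majority only if it is strong enough.

    `ask(question) -> str` is a hypothetical stand-in for a model call.
    Answers are compared after trimming whitespace and lowercasing.
    """
    replies = [ask(question).strip() for _ in range(samples)]
    normalized = [r.lower() for r in replies]
    best, count = Counter(normalized).most_common(1)[0]
    if count / samples >= min_agreement:
        return replies[normalized.index(best)]  # first original spelling of the winner
    return UNSURE

def steady_model(question):
    """Fake model that always gives the same answer."""
    return "Paris"

_wobbly_answers = cycle(["1947", "1952", "1938", "1961", "1947"])

def wobbly_model(question):
    """Fake model whose answers drift from call to call."""
    return next(_wobbly_answers)

if __name__ == "__main__":
    print(answer_or_abstain(steady_model, "What is the capital of France?"))
    print(answer_or_abstain(wobbly_model, "When was the (fictional) Roe Institute founded?"))
```

Agreement is a useful signal but not proof: a model can repeat the same wrong answer every time, so this check complements grounding and human review rather than replacing them.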

While these efforts significantly reduce hallucinations, they don’t eliminate them entirely, especially in general AI where open-ended tasks invite more errors. Specialized systems, with their narrow focus and redundant safeguards, are better equipped to handle critical tasks reliably.
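Retrieval-augmented generation (RAG), mentioned in the list above, can be sketched in a few lines: find passages relevant to the question, instruct the model to answer only from them, and refuse when nothing relevant is found. Everything here is a simplified assumption: the three-passage knowledge base paraphrases this article, retrieval is plain word overlap rather than the embedding search real systems typically use, and the step that sends the prompt to a model is left out.

```python
import re

# Hypothetical mini knowledge base (paraphrased from this article).
DOCUMENTS = {
    "hudson-2009": "In 2009 an Airbus A320 landed on the Hudson River after a bird strike caused dual engine failure.",
    "mcas-fix": "After the 737 MAX crashes, MCAS was redesigned to use redundant angle-of-attack sensor inputs.",
    "do-178c": "DO-178C is a standard used for certifying airborne software.",
}

STOPWORDS = {"a", "an", "the", "of", "in", "on", "to", "and", "is", "was",
             "did", "why", "what", "who", "how", "after", "for", "used"}

def tokens(text):
    """Lowercase word set with common filler words removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def retrieve(question, k=2, min_overlap=2):
    """Return ids of up to k documents sharing at least `min_overlap` words with the question."""
    q = tokens(question)
    scored = sorted(
        ((len(q & tokens(text)), doc_id) for doc_id, text in DOCUMENTS.items()),
        reverse=True,
    )
    return [doc_id for score, doc_id in scored[:k] if score >= min_overlap]

def build_grounded_prompt(question):
    """Build a prompt that confines the model to retrieved sources, or None if there are none."""
    hits = retrieve(question)
    if not hits:
        return None  # nothing to ground the answer in: say "I don't know" instead of guessing
    sources = "\n".join(f"[{doc_id}] {DOCUMENTS[doc_id]}" for doc_id in hits)
    return (
        "Answer using ONLY the sources below and cite their ids in brackets. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"{sources}\n\nQuestion: {question}"
    )

if __name__ == "__main__":
    print(build_grounded_prompt("Why did the A320 land on the Hudson River?"))
    print(build_grounded_prompt("Who won the 2031 chess olympiad?"))
```

The first question retrieves the Hudson passage and produces a prompt that cites it; the second matches nothing, so the function returns `None` and the application can decline to answer rather than let the model improvise.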

The Human Edge: Why Pilots and Experts Remain Essential

Even as AI advances, human expertise remains irreplaceable in high-stakes roles like piloting, particularly in those rare, “one-in-a-million” scenarios where AI encounters the unknown. Autopilots excel at routine tasks—maintaining stable flight, optimizing routes, or managing fuel—but they lack the human ability to adapt creatively to unprecedented situations. A pilot’s intuition, experience, and situational awareness are critical when data alone isn’t enough.

Take the 2009 “Miracle on the Hudson,” where Captain Chesley “Sully” Sullenberger landed a disabled Airbus A320 on the Hudson River after a bird strike caused dual engine failure. This scenario, untested in any training dataset, required split-second judgment and contextual understanding no AI could replicate at the time. While modern autopilots might stabilize a plane in such a case, only a human could weigh the risks of landing on water versus attempting a return to an airport.

This human edge ensures that pilots, surgeons, air traffic controllers, and other experts will remain vital for decades. AI is a powerful partner—handling repetitive tasks, processing vast data, and offering real-time insights—but humans provide the final layer of judgment when the unexpected arises. Regulatory bodies like the FAA mandate human oversight in aviation, reinforcing this partnership.

AI Hallucinations on YouTube

AI Hallucinations Explained: Why Smart Machines Still Make Dumb Mistakes #ainews #aihelp #usingai

YouTube Channel: aifun

What Is AI Hallucination? Surprising AI Fails!

YouTube Channel: TrickMeNot

How To Prevent AI Hallucinations #ai #hallucination #llm #tech #coding #developer

YouTube Channel: Jessica Wang

AI Chatbots Are “Hallucinating” Reality

YouTube Channel: Truthstream Media

Conclusion: A Future of Trust and Collaboration

AI hallucinations pose a challenge, but the gap between AI’s potential and its reliability is narrowing. General AI’s versatility comes with higher hallucination risks, while specialized AI’s focused design, backed by redundancy and human oversight, ensures safety in critical systems like aviation. Ongoing advancements—better data, uncertainty modeling, and rigorous testing—are making AI more trustworthy every day.
