How GPT Knows What to Say

A Deep Dive Into the Mind of the Machine

Last Updated: 10th November 2025

Author: Nick Smith, with the help of ChatGPT

Have you ever asked GPT a question and found yourself wondering: How does it know that? It’s like talking to someone who seems to have read the entire internet without ever sleeping. But beneath the surface of this seemingly magical machine lie decades of development, a maze of algorithms, and a world built from tokens, transformers, and probabilities.

Let’s pull back the curtain. This article will take you on a journey through the evolution of GPT (Generative Pre-trained Transformer), explain how it actually works, and explore what the future of this astonishing technology might look like.

From ELIZA to GPT: A Brief History of Conversational AI

The story of AI conversation didn’t start with ChatGPT—it began back in the 1960s with ELIZA, a simple program that mimicked a psychotherapist by rephrasing users' statements into questions. It was clever, but it didn’t actually understand anything.

Fast forward to the 2010s, when machine learning matured rapidly. In 2017, a breakthrough arrived: researchers at Google introduced a new neural architecture called the Transformer. Earlier language models, such as recurrent neural networks, processed text one word at a time, in strict sequence; Transformers could analyze an entire chunk of text at once, thanks to a mechanism called self-attention.

OpenAI took that architecture and pushed it to new levels. Starting with GPT-1 (2018), a modest model with 117 million parameters, they kept scaling up:

- GPT-2 (2019): 1.5 billion parameters. Suddenly, the outputs were shockingly fluent, and controversial: OpenAI initially withheld the full model, fearing misuse.
- GPT-3 (2020): 175 billion parameters. This was the model that put generative AI on the public map.
- ChatGPT (2022): A chat-optimized version of GPT-3.5, fine-tuned to be more helpful, safer, and interactive.
- GPT-4 (2023): Even more capable, and multimodal: it can accept images as well as text as input.
- GPT-4o (2024): A single “omni” model that natively handles text, audio, and images, enabling near real-time voice conversations and faster responses.

But what actually happens when you ask GPT a question?

Under the Hood: How GPT Understands and Responds

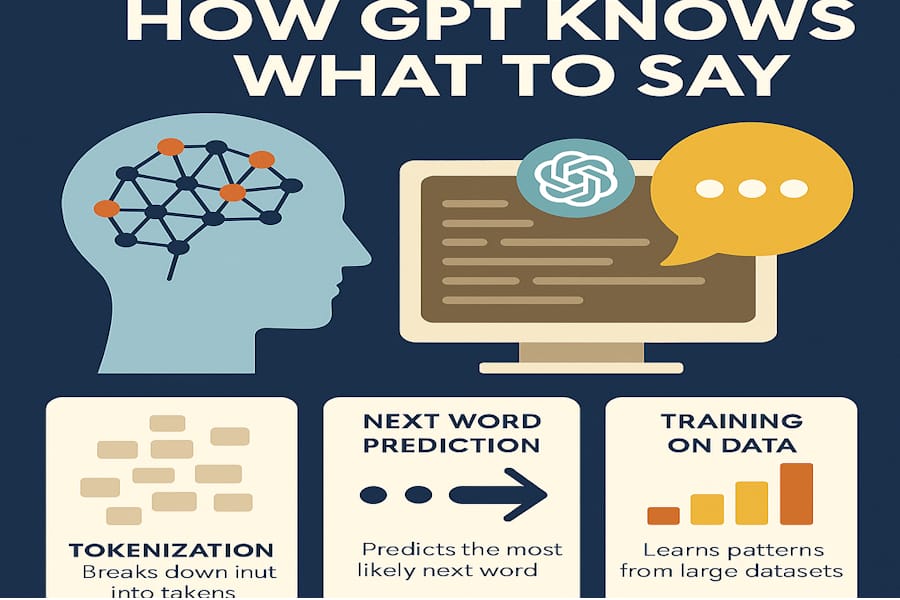

Step 1: Tokenization – Turning Words Into Numbers

GPT doesn’t read words like we do. It breaks your sentence down into tokens: chunks of text that can be whole words, pieces of words, or even single characters. For instance, depending on the tokenizer’s learned vocabulary, “artificial intelligence” might become [art, ificial, intelligence].

Each token is then mapped to an integer ID from the model’s fixed vocabulary, and it is these numbers that are passed into the model.
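To make the idea concrete, here is a minimal sketch of byte-pair encoding (BPE), the family of subword algorithms GPT tokenizers are built on. It is a simplified illustration under stated assumptions: real GPT tokenizers operate on bytes and learn tens of thousands of merge rules from data, whereas the tiny merge list, the alphabet, and the helper names `bpe_encode` and `build_vocab` below are hypothetical, chosen only so that “artificial” splits into the [art, ificial] pieces from the example.

```python
# Toy byte-pair encoding (BPE): a simplified, hypothetical illustration,
# not the actual tokenizer used by any GPT model.

# Hypothetical merge rules, in the order they were "learned" (earlier = higher priority).
TOY_MERGES = [
    ("a", "r"), ("ar", "t"),
    ("i", "f"), ("if", "i"),
    ("c", "i"), ("ci", "a"), ("cia", "l"),
    ("ifi", "cial"),
]

def bpe_encode(word, merges):
    """Split `word` into subword tokens by repeatedly applying the best-ranked merge."""
    ranks = {pair: rank for rank, pair in enumerate(merges)}
    tokens = list(word)  # start from single characters
    while len(tokens) > 1:
        # Rank every adjacent pair; pairs with no merge rule get infinity.
        candidates = [
            (ranks.get((left, right), float("inf")), i)
            for i, (left, right) in enumerate(zip(tokens, tokens[1:]))
        ]
        best_rank, i = min(candidates)  # lowest rank wins; ties go to the leftmost pair
        if best_rank == float("inf"):
            break  # no learned merge applies any more
        tokens[i:i + 2] = [tokens[i] + tokens[i + 1]]
    return tokens

def build_vocab(alphabet, merges):
    """Toy vocabulary: one ID per base character, then one new ID per merge."""
    symbols = list(alphabet) + [left + right for left, right in merges]
    return {symbol: token_id for token_id, symbol in enumerate(symbols)}

vocab = build_vocab("abcdefghijklmnopqrstuvwxyz", TOY_MERGES)
pieces = bpe_encode("artificial", TOY_MERGES)
ids = [vocab[piece] for piece in pieces]
print(pieces, ids)  # ['art', 'ificial'] [27, 33]
```

A word the toy merges don’t cover simply falls back to smaller pieces: `bpe_encode("cat", TOY_MERGES)` returns `['c', 'a', 't']`. Byte-level tokenizers take this idea to its limit, so any text can be encoded, even words never seen during training.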

Step 2: Predicting the Future – One Token at a Time

GPT’s primary task is deceptively simple: predict the next token.

When you input a prompt like “The sun sets over the…”, the model calculates a probability for every token in its vocabulary, which typically has tens of thousands of entries or more. Maybe “horizon” is most likely, followed by “ocean” or “mountains”. It picks one (often the most probable, sometimes one of several plausible options), appends it to the sequence, and repeats.

This is how GPT “writes”—one token at a time, hundreds or thousands of times per reply.
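The predict, append, repeat loop can be sketched in a few lines of Python. The real model conditions on the whole sequence through a neural network; in this sketch a hypothetical lookup table, `TOY_NEXT_TOKEN_PROBS`, keyed only on the last token, stands in for it, the made-up `<end>` token plays the role of an end-of-sequence marker, and the loop always takes the most probable option (greedy decoding).

```python
# Hypothetical next-token probabilities, keyed on the previous token only.
# A real GPT computes these with a neural network that sees the whole sequence.
TOY_NEXT_TOKEN_PROBS = {
    "the": {"horizon": 0.6, "ocean": 0.3, "mountains": 0.1},
    "horizon": {".": 0.9, "and": 0.1},
    "ocean": {".": 1.0},
    ".": {"<end>": 1.0},
}

def next_token_probs(tokens):
    """Stand-in for the model: return a {token: probability} dict for the next position."""
    return TOY_NEXT_TOKEN_PROBS.get(tokens[-1], {"<end>": 1.0})

def generate(prompt_tokens, max_new_tokens=20):
    """Autoregressive generation: predict one token, append it, repeat."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        next_token = max(probs, key=probs.get)  # greedy: take the most probable token
        if next_token == "<end>":
            break  # the "model" signalled that the reply is finished
        tokens.append(next_token)
    return tokens

print(" ".join(generate(["The", "sun", "sets", "over", "the"])))
# The sun sets over the horizon .
```

The `max_new_tokens` cap mirrors the length limits real systems place on a reply, so the loop always terminates even if the end marker never becomes the top choice.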

Step 3: Transformers and Self-Attention – Making Sense of Context

The model uses a Transformer architecture to understand the full context of your prompt. It’s not just looking at the last word—it’s weighing the importance of all the words in the input, thanks to a mechanism called self-attention.

For example, in the sentence “The chicken didn’t cross the road because it was too tired,” GPT uses self-attention to figure out what “it” refers to. This helps with disambiguation and understanding complex instructions.
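At its core, self-attention is a weighted average: each position scores the positions it can see, turns those scores into weights with a softmax, and mixes their information accordingly. The sketch below implements scaled dot-product attention with a causal mask (GPT-style models let each token attend only to itself and earlier tokens). It is written in plain Python for readability and simplifies heavily: in a real model the query, key, and value vectors come from learned projections of the token embeddings, with many attention heads and layers, whereas here small made-up vectors are passed in directly.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention with a causal mask.

    Position i compares its query with the keys of positions 0..i only,
    then returns a weighted average of those positions' value vectors.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for i, query in enumerate(queries):
        # Similarity scores against visible positions, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Mix the value vectors of visible positions according to the weights.
        mixed = [
            sum(w * values[j][c] for j, w in enumerate(weights))
            for c in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Made-up 2-dimensional vectors for three tokens, reused as both queries and keys.
toy_keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
toy_values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
outputs, weights = causal_self_attention(toy_keys, toy_keys, toy_values)
# Token 2's query matches tokens 0 and 2 best, so they get more weight than token 1.
print([round(w, 3) for w in weights[2]])
```

In the chicken example, a trained model would learn vectors under which “it” scores highly against “chicken” rather than “road”, so the representation of “it” borrows mostly from “chicken”.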

How GPT Learned to Speak Our Language

GPT was trained on a staggeringly large and diverse dataset—think websites, books, academic articles, dialogue transcripts, and more. The training happens in two phases:

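- Pre-training: The model reads an enormous amount of text (hundreds of billions of tokens, in GPT-3’s case) and learns to predict the next token. This is where it picks up grammar, facts, patterns of reasoning, and general world knowledge.
- Fine-tuning: The pre-trained model is then refined with human help. People write example responses and rank alternative outputs from best to worst; those rankings train a reward model, and the language model is then optimized against that reward model with reinforcement learning. This process, called Reinforcement Learning from Human Feedback (RLHF), steers the model toward helpful and safe responses.

This combination of self-supervised pre-training and human feedback gives GPT its conversational polish.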
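Both phases boil down to minimizing a number called a loss. The sketch below shows two standard objectives under simplifying assumptions: the next-token cross-entropy used in pre-training, and the pairwise ranking loss commonly used to train an RLHF reward model. The function names and all numbers are hypothetical; real training computes these losses over huge batches and uses them to adjust billions of parameters by gradient descent.

```python
import math

def next_token_loss(probs_of_actual_next_tokens):
    """Pre-training objective: average negative log-probability (cross-entropy)
    the model assigned to the tokens that actually came next in the training text."""
    n = len(probs_of_actual_next_tokens)
    return -sum(math.log(p) for p in probs_of_actual_next_tokens) / n

def reward_pairwise_loss(reward_preferred, reward_rejected):
    """Reward-model objective: -log(sigmoid(r_preferred - r_rejected)).
    Small when the reward model scores the human-preferred answer higher."""
    margin = reward_preferred - reward_rejected
    return math.log1p(math.exp(-margin))  # algebraically equal to -log(sigmoid(margin))

# Made-up numbers: the model gave the true next tokens probabilities 0.5 and 0.25.
print(next_token_loss([0.5, 0.25]))  # about 1.04 (exactly 1.5 * ln 2)
# A reward model that agrees with the human ranking gets a lower loss.
print(reward_pairwise_loss(2.0, 0.0) < reward_pairwise_loss(0.0, 2.0))  # True
```

The pre-training loss reaches zero only if the model puts probability 1 on every true next token, which is why driving it down forces the model to absorb grammar and facts.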

Why Doesn’t GPT Always Say the Same Thing?

Even if you input the same prompt twice, GPT might give you different answers. That’s because its predictions are probabilistic. Instead of always choosing the most likely token, it can sample from several top options to add variation and creativity.

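This randomness is controlled by settings such as temperature (higher values give less likely tokens more of a chance, so the output gets more adventurous), top-k sampling (only the k most likely tokens are considered) and top-p, or nucleus, sampling (only the smallest set of top tokens whose combined probability reaches p is considered).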

This is why sometimes GPT feels formal, sometimes poetic, and occasionally a bit quirky.
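Here is a minimal sketch of how temperature, top-k, and top-p can work together when picking the next token from the model’s raw scores (logits). The function name, the order in which the filters are applied, and the toy logits are assumptions for illustration; libraries and APIs differ in which of these settings they expose and how they combine them.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, top_k=None, top_p=None, rng=random):
    """Pick one token from a {token: logit} dict using temperature, top-k and top-p."""
    if temperature <= 0:
        return max(logits, key=logits.get)  # treat temperature 0 as greedy decoding
    # Temperature: divide the logits before the softmax.
    # Below 1 sharpens the distribution; above 1 flattens it (more "adventurous").
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(((e / total, tok) for tok, e in exps.items()), reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]  # top-k: keep only the k most likely tokens
    if top_p is not None:
        # Top-p (nucleus): keep the smallest run of top tokens whose probability sums to >= p.
        kept, cumulative = [], 0.0
        for prob, tok in ranked:
            kept.append((prob, tok))
            cumulative += prob
            if cumulative >= top_p:
                break
        ranked = kept
    tokens = [tok for _, tok in ranked]
    weights = [prob for prob, _ in ranked]
    # random.choices accepts unnormalized weights, so the survivors need not sum to 1.
    return rng.choices(tokens, weights=weights, k=1)[0]

toy_logits = {"horizon": 2.0, "ocean": 1.0, "mountains": 0.0}
rng = random.Random(42)  # fixed seed so the run is reproducible
print([sample_next_token(toy_logits, temperature=0.8, top_p=0.9, rng=rng) for _ in range(5)])
```

With these settings, “horizon” and “ocean” together already cover more than 90% of the probability, so “mountains” is filtered out and only the first two can appear. Setting `temperature=0` or `top_k=1` always returns “horizon”, which is why low settings make replies more predictable.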

Limitations and Hallucinations: Why GPT Isn’t a Human

Let’s be clear: GPT doesn’t understand the world—it doesn’t have beliefs, consciousness, or emotions.

It’s a statistical pattern-matcher, not a thinker. That’s why it can make mistakes or invent facts (known as “hallucinations”). If something is statistically likely but factually incorrect, GPT might still say it.

It also inherits biases from its training data. If certain views, styles, or stereotypes dominate its dataset, that can subtly shape its replies.

What the Future Holds

We’re only at the beginning of this new AI era. Here’s what’s on the horizon:

- Multimodal models: GPT-4o already handles text, images, and sound. Future models will merge these more seamlessly.
- Agentic behavior: AI that not only chats, but takes action, like booking appointments or running workflows autonomously.
- Personalized GPTs: Custom AI assistants trained on your data and style.
- On-device models: Smaller, faster GPTs running locally on your phone or laptop, with no internet required.
- AI + human collaboration: From coding to art to education, the future isn’t AI replacing us, but working with us.

Final Thoughts: Predict, Don’t Pretend

GPT doesn’t think. It predicts.

It doesn’t dream, feel, or “know” in the human sense. It’s a mirror, trained on our words, and filtered through math.

But what a mirror it is.

Used wisely, GPT can augment our thinking, spark ideas, and help us navigate information more effectively. Just don’t confuse it for a mind—it’s not one.

And perhaps, understanding that makes GPT even more impressive—not less.
