Type: Article -> Category: AI What Is

What are AI Weights?

The Backbone of Machine Learning Models

Publish Date: Last Updated: 14th January 2026

Author: nick smith- With the help of CHATGPT

Introduction to AI Weights

At the heart of every artificial intelligence (AI) and machine learning (ML) model lies a hidden structure of numbers known as weights. These values may be invisible to end users, but they are the key drivers of how models “think,” learn, and make predictions. Weights decide how strongly each input feature influences the output, shaping everything from a chatbot’s ability to understand your words to a medical AI’s capacity to detect early signs of disease.

Understanding AI weights is not only essential for researchers and engineers, but also for businesses and policymakers who rely on AI systems to make critical decisions. By exploring what weights are, how they work, and the challenges involved in optimizing them, we can better grasp why they are often described as the backbone of machine learning.

What Are AI Weights?

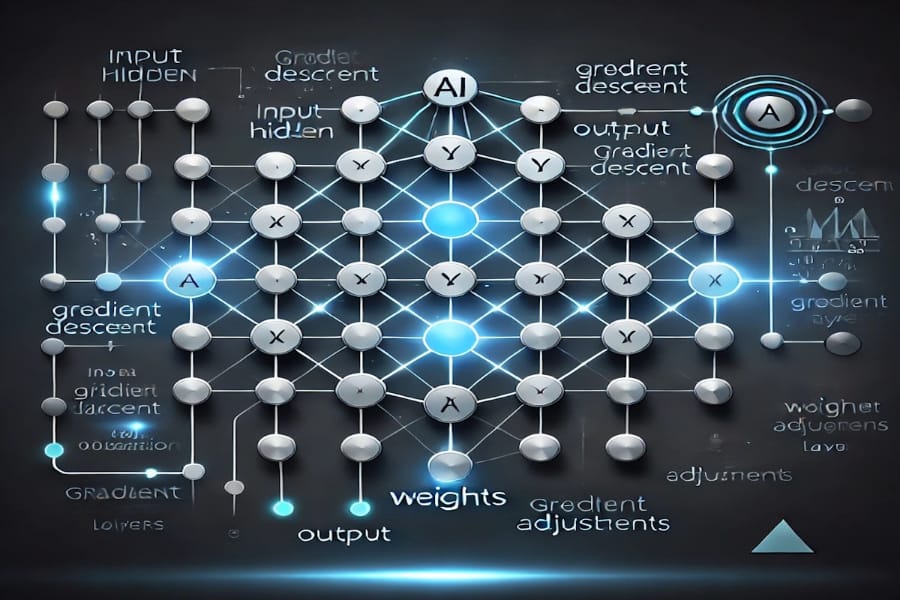

In the simplest terms, AI weights are numerical values that define the strength of connections within a neural network.

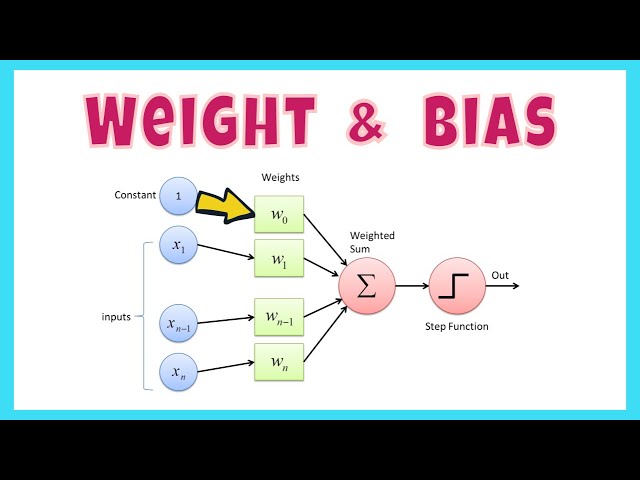

- In a neural network, each neuron (or node) receives inputs, multiplies them by associated weights, adds a bias term, and applies an activation function to produce an output.

- These weights are continuously adjusted during training, enabling the network to learn patterns and relationships hidden in the data.

Mathematically, this process can be expressed as:

Output = Activation(Σ (Input × Weight) + Bias)

Here, the weights act as multipliers, amplifying or dampening the influence of each input on the final decision. Over time, as the model trains on data, the weights shift closer to values that minimize errors and maximize predictive accuracy.

Why Weights Matter: Their Role in Model Outcomes

The way weights are configured has a direct impact on the performance and reliability of AI systems:

- Prediction Accuracy

- Well-adjusted weights allow models to generalize to new, unseen data.

- Poorly tuned weights can lead to overfitting (memorizing training data) or underfitting (failing to learn meaningful patterns).

- Feature Importance

- Weights indicate which inputs matter most. For instance, in predicting house prices, a high weight for “location” signals its strong influence on the outcome.

- Neural Activation

- Weights control how neurons activate in hidden layers, shaping the decision-making pathways of the network.

- Model Stability

- Extremely large or imbalanced weights can destabilize learning, leading to erratic predictions.

- Training Efficiency

- Smart weight initialization accelerates convergence, allowing models to learn faster and with fewer computational resources.

How AI Weights Are Adjusted

Weights are not fixed—they are optimized through training. This happens via an iterative process:

- Forward Propagation

Inputs are passed through the network, multiplied by weights, and transformed into predictions. - Loss Calculation

The difference between the prediction and the actual outcome is measured using a loss function. - Backpropagation

The model calculates the gradient (slope) of the loss with respect to each weight. - Weight Update

Using algorithms like gradient descent, weights are nudged in the direction that reduces error. - Iteration

This cycle repeats thousands—or even millions—of times until the model reaches optimal performance.

Challenges in Optimizing Weights

Despite being central to machine learning, weight optimization is not always straightforward. Key challenges include:

- Vanishing and Exploding Gradients

In deep networks, weight updates can shrink (vanish) or grow uncontrollably (explode), making training unstable. - Local Minima

Models can get “stuck” in suboptimal weight configurations instead of reaching the global best solution. - Overfitting

Over-adjusted weights may cause the model to memorize training data, harming its ability to generalize. - Computational Demand

Large-scale AI models (like GPT-style LLMs) contain billions of weights, requiring enormous processing power and energy to optimize.

Techniques to Optimize Weights

Researchers and practitioners use a variety of strategies to manage weights effectively:

- Adaptive Learning Rates

Optimizers like Adam, RMSProp, and AdaGrad dynamically adjust learning rates to balance speed and accuracy in weight updates. - Regularization

Techniques such as L1/L2 regularization penalize extreme weight values, reducing overfitting. - Batch Normalization

By normalizing inputs within layers, networks maintain stable weight distributions, improving training stability. - Smart Weight Initialization

Methods like Xavier or He initialization ensure weights start at balanced values, avoiding early instability. - Pruning and Quantization

- Pruning removes unimportant weights to reduce model size.

- Quantization compresses weights into smaller numerical formats, making AI models faster and more energy-efficient without major accuracy loss.

Real-World Applications of AI Weights

Understanding and optimizing weights enables breakthroughs across industries:

- Healthcare: Fine-tuned weights help detect tumors in medical imaging.

- Finance: Weights determine risk factors in credit scoring models.

- Natural Language Processing (NLP): Word embeddings and transformer models rely heavily on optimized weight matrices.

- Autonomous Vehicles: Weights balance multiple inputs (cameras, sensors, radar) to make split-second driving decisions.

Conclusion

AI weights are the silent yet powerful forces that guide machine learning models. They dictate how data flows through networks, how predictions are formed, and how reliable those predictions are. By carefully adjusting and optimizing these weights, researchers can build AI systems that are accurate, efficient, and robust enough to handle real-world complexity.

As AI models grow in scale and sophistication, the science of weight optimization will only become more important. From powering healthcare breakthroughs to enabling natural conversations with machines, AI weights remain the backbone of intelligence in the digital age.

Some YouTube Videos to help explain AI Weights

Are Language Models Just Weights? New AI Research Explained

YouTube Channel: Richard Aragon

Jack Morris: Stuffing Context is not Memory, Updating Weights is

YouTube Channel: AI Engineer

Olmo3 Is What ‘Open Weights’ Was Supposed to Mean

YouTube Channel: Better Stack

What are Weights in AI? A Simple Explanation | AI Nuggets

YouTube Channel: AiSecurityDIR

This Tiny 1-Bit Model Could Change AI Forever

YouTube Channel: Better Stack

🔴 Weights and Biases in Neural Networks Explained in JUST 5 Minutes!

YouTube Channel: Analytics Camp

Microsoft Accidentally Created the Most Efficient AI Ever

YouTube Channel: AI Revolution

How Open Weights Are Democratizing AI: Inside the Open-Source Revolution

YouTube Channel: LifeOnAutomate

Latest AI What Is Articles

AI Questions and Answers section for What are AI Weights: The Backbone of Machine Learning Models

Welcome to a new feature where you can interact with our AI called Jeannie. You can ask her anything relating to this article. If this feature is available, you should see a small genie lamp above this text. Click on the lamp to start a chat or view the following questions that Jeannie has answered relating to What are AI Weights: The Backbone of Machine Learning Models.

Visitor asked: please explain in more detail what a Neural Network Activation weight is

Jeannie’s answer to the question: In machine learning and particularly in deep learning, weights play an integral role in modelling the significance of input features relative to the output. Specifically, in the context of a neural network, AI weights - more commonly referred to as "weights" - learn and decide how much influence or importance a particular input feature should have on the final output or prediction. These weights are used in the computation of every node in the hidden layers of a neural network. They influence the value that a neuron passes forward in the network during the activation function phase. Essentially, each input to a neuron is multiplied by a corresponding weight, which determines the contribution of the input. The result is then passed through an activation function, such as the ReLU or Sigmoid, that maps the input to the output. During the AI model training phase, these weights are fine-tuned through a process called backpropagation that uses techniques such as gradient descent to iteratively improve the model by reducing the error difference between predicted and actual outputs. The optimal weight values can drastically improve the accuracy and performance of AI models. Therefore, understanding and effectively adjusting neural network weights is a critical part of developing and training robust machine learning models.

Date: 2025-02-09

Visitor asked: In model complexity what are classed as excessive weights

Jeannie’s answer to the question: Excessive weights in model complexity are the weights in a machine learning or deep learning model that contribute to overfitting. These are weights that have been adjusted too specifically to the training data during the AI model training process. This extreme fine tuning can cause the model to perform poorly on unseen data because it's too intricately fit to the patterns and noise in the training set. Therefore, these excessive weights make the model less generalized, hindering its learning and predictive capabilities on new data. This is a common challenge when training complex models like deep neural networks. It's important to strike a balance in training to avoid weights that are too excessive or too limited, as both can negatively impact the model's performance.

Date: 2025-02-09

Visitor asked: Please elaborate on Techniques like L1/L2 regularization to optimize weights

Jeannie’s answer to the question: In machine learning models, particularly neural networks utilized in deep learning, AI weights play a critical role. They are numerical parameters that help the model in making predictions by strengthening or weakening the influence of input variables. The optimization of these weights is critical to the development of a strong and effective AI model. A key method for doing this involves using techniques such as L1 and L2 regularization. L1 and L2 are regularization techniques that add a penalty equivalent to the absolute value of the magnitude of coefficients (L1, also called Lasso regression) or the square of the magnitude of coefficients (L2, also called Ridge regression) to the loss function. This penalty, also known as a regularization term, discourages learning a more complex or flexible model, so as to avoid the risk of overfitting. L1 regularization has the propensity to shrink some parameters to zero, effectively excluding certain features entirely. This is especially useful when you suspect that only a subset of your features are actually influential. On the other hand, L2 regularization doesn't push for sparse solutions as much as L1 does, but rather promotes smaller and more distributed weight values. Both regularization techniques are used to prevent overfitting, which is a crucial challenge in machine learning. Overfitting happens when a model learns the training data too well, capturing noise along with inherent relationships. This typically results in poor performance on unseen, test data. By incorporating these techniques into AI model training, developers are able to create models that generalize better to unseen data and are thus more useful in practical AI applications.

Date: 2025-02-12

Type: Article -> Category: AI What Is