Type: Article -> Category: AI What Is

What are AI Performance Metrics?

A Comprehensive Guide to Accuracy, Precision, Recall, F1 Score, and Confusion Matrix

Publish Date: Last Updated: 13th January 2026

Author: Nick Smith, with the help of Grok 3

AI performance metrics are critical tools for evaluating the effectiveness of machine learning models. Metrics such as Accuracy, Precision, Recall, F1 Score, and the Confusion Matrix show how well a model performs, particularly in classification tasks such as spam detection and medical diagnosis. This article explores these metrics in detail, their historical development, their importance in AI, and emerging trends shaping their future. Whether you're a data scientist, AI enthusiast, or business leader, understanding these metrics is essential for building reliable and robust AI systems.

AI Performance Metrics

AI performance metrics quantify the performance of machine learning models by comparing their predictions to actual outcomes. These metrics are particularly vital in supervised learning tasks, such as classification (e.g., spam detection, medical diagnosis) and regression (e.g., predicting house prices). They help practitioners identify strengths and weaknesses in models, optimize algorithms, and ensure systems meet real-world requirements.

Popular classification metrics like Accuracy, Precision, Recall, F1 Score, and the Confusion Matrix are widely used because they provide a standardized way to assess model performance across domains, from healthcare to finance to autonomous vehicles.

Historical Context of AI Performance Metrics

The development of AI performance metrics is rooted in the evolution of statistics and machine learning. In the mid-20th century, statistical measures like Accuracy and Error Rate were used to evaluate early predictive models. The introduction of the Confusion Matrix in the 1970s formalized the evaluation of classification tasks, enabling researchers to break down predictions into true positives, true negatives, false positives, and false negatives.

Precision and Recall originated in information retrieval research in the 1950s and became standard measures for systems such as search engines and document classification tools during the 1980s and 1990s. These metrics address the limitations of Accuracy, particularly in imbalanced datasets where one class dominates. The F1 Score, the harmonic mean of Precision and Recall, grew out of the F-measure used in information retrieval and gained prominence in the early 2000s as machine learning expanded into domains like natural language processing (NLP) and medical diagnostics.

The 2010s marked a turning point with the rise of deep learning, which called for robust evaluation frameworks for complex models. The Confusion Matrix became a staple for visualizing performance, while long-established measures like Area Under the ROC Curve (AUC-ROC) and Log Loss were widely adopted to complement traditional measures. Today, AI performance metrics are integral to benchmarking state-of-the-art models, both in competitions such as those hosted on Kaggle and in real-world applications.

Key AI Performance Metrics Explained

Below, we dive into the core metrics—Accuracy, Precision, Recall, F1 Score, and Confusion Matrix—explaining their definitions, formulas, use cases, and limitations.

1. Accuracy

Definition: Accuracy measures the proportion of correct predictions made by a model out of all predictions.

Formula: $$ \text{Accuracy} = \frac{\text{True Positives (TP)} + \text{True Negatives (TN)}}{\text{TP} + \text{TN} + \text{False Positives (FP)} + \text{False Negatives (FN)}} $$

Use Case: Accuracy is ideal for balanced datasets, such as classifying emails as spam or not spam, where the classes are roughly equal in size.

Limitations: Accuracy can be misleading in imbalanced datasets. For example, in medical diagnosis, where only 1% of patients have a disease, a model that predicts "no disease" for everyone could achieve 99% Accuracy but fail to identify any actual cases.
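The 1% example above can be reproduced in a few lines. This is a minimal sketch using made-up data (1,000 hypothetical patients, 10 with the disease) and a trivial "always negative" predictor; the helper names `accuracy` and `recall` are illustrative, not taken from any particular library.

```python
# Hypothetical screening data: 1,000 patients, 10 of whom (1%) have the disease.
y_true = [1] * 10 + [0] * 990

# A trivial "model" that predicts "no disease" for every patient.
y_pred = [0] * len(y_true)

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the actual labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

def recall(y_true, y_pred):
    """Fraction of actual positives that the model identified."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp / (tp + fn) if (tp + fn) else 0.0

print(f"Accuracy: {accuracy(y_true, y_pred):.2%}")  # 99.00%
print(f"Recall:   {recall(y_true, y_pred):.2%}")    # 0.00%
```

The model scores 99% Accuracy while finding none of the 10 actual cases, which is why Accuracy alone is a poor guide on imbalanced data.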

2. Precision

Definition: Precision measures the proportion of true positive predictions out of all positive predictions made by the model.

Formula: $$ \text{Precision} = \frac{\text{TP}}{\text{TP} + \text{FP}} $$

Use Case: Precision is crucial in scenarios where false positives are costly, such as spam detection (misclassifying a legitimate email as spam) or fraud detection.

Limitations: High Precision may come at the expense of Recall, as a model may become overly conservative in making positive predictions.
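As a small illustration of the Precision formula, the sketch below computes it from raw counts. The spam-filter numbers are invented for the example, and returning 0.0 when the model makes no positive predictions is one common convention, assumed here rather than prescribed by the article.

```python
def precision(tp: int, fp: int) -> float:
    """Precision = TP / (TP + FP); returns 0.0 when no positives were predicted."""
    predicted_positive = tp + fp
    return tp / predicted_positive if predicted_positive else 0.0

# Hypothetical spam filter: 50 emails flagged as spam, 45 of them really spam.
spam_precision = precision(tp=45, fp=5)
print(f"Precision: {spam_precision:.2f}")  # 0.90
```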

3. Recall (Sensitivity or True Positive Rate)

Definition: Recall measures the proportion of true positives identified out of all actual positive cases.

Formula: $$ \text{Recall} = \frac{\text{TP}}{\text{TP} + \text{FN}} $$

Use Case: Recall is vital in applications where missing a positive case is critical, such as medical diagnoses (e.g., detecting cancer) or search engines (retrieving relevant documents).

Limitations: Maximizing Recall can increase false positives, reducing Precision.
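The trade-off between Precision and Recall is easiest to see by moving a decision threshold. The following sketch assumes a model that outputs a score per example; the scores, labels, and the function name `precision_recall_at` are all hypothetical.

```python
# Hypothetical model scores (probability of the positive class) and true labels.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20]
labels = [1,    1,    0,    1,    0,    1,    0,    0]

def precision_recall_at(threshold, scores, labels):
    """Classify as positive when score >= threshold, then compute both metrics."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

for threshold in (0.85, 0.50, 0.25):
    p, r = precision_recall_at(threshold, scores, labels)
    print(f"threshold={threshold:.2f}  precision={p:.2f}  recall={r:.2f}")
# threshold=0.85  precision=1.00  recall=0.50
# threshold=0.50  precision=0.60  recall=0.75
# threshold=0.25  precision=0.57  recall=1.00
```

Lowering the threshold captures every positive case on this toy data, but only by accepting more false positives.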

4. F1 Score

Definition: The F1 Score is the harmonic mean of Precision and Recall, providing a single metric that balances both.

Formula: $$ \text{F1 Score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} $$

Use Case: The F1 Score is widely used in imbalanced datasets, such as text classification or anomaly detection, where both Precision and Recall are important.

Limitations: The F1 Score assumes equal importance of Precision and Recall, which may not align with all use cases (e.g., prioritizing Recall in medical diagnostics).
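One way to handle the equal-weighting limitation is the F-beta score, a standard generalization of F1 that is not covered in the article itself. The sketch below is illustrative only: the function name `f_beta` and the input values are assumptions, and `beta=2` is just one possible choice for weighting Recall more heavily.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall; beta=1 gives the F1 Score."""
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)

precision, recall = 0.9, 0.5  # hypothetical values

f1 = f_beta(precision, recall)            # 2 * 0.45 / 1.4
f2 = f_beta(precision, recall, beta=2.0)  # weights recall more heavily
arithmetic_mean = (precision + recall) / 2

print(f"F1: {f1:.3f}, F2: {f2:.3f}, arithmetic mean: {arithmetic_mean:.3f}")
# F1: 0.643, F2: 0.549, arithmetic mean: 0.700
```

The harmonic mean sits below the arithmetic mean because it penalizes the gap between the two values, and F2 drops further here because the weaker of the two is Recall.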

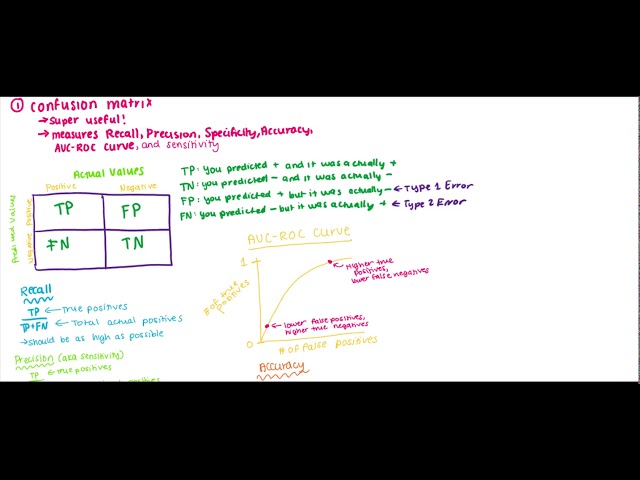

5. Confusion Matrix

Definition: A Confusion Matrix is a tabular representation of a model’s predictions versus actual outcomes, showing TP, TN, FP, and FN.

Structure:

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | True Positive (TP) | False Negative (FN) |
| Actual Negative | False Positive (FP) | True Negative (TN) |
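The four cells can be counted directly from paired labels and predictions. This is a minimal sketch with invented data; `confusion_counts` is a hypothetical helper, and the label `1` is assumed to mark the positive class.

```python
from collections import Counter

def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FN, FP, and TN for a binary task."""
    counts = Counter()
    for actual, predicted in zip(y_true, y_pred):
        if actual == positive:
            counts["TP" if predicted == positive else "FN"] += 1
        else:
            counts["FP" if predicted == positive else "TN"] += 1
    return counts

# Hypothetical labels: 1 = positive, 0 = negative.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]

c = confusion_counts(y_true, y_pred)
print(f"{'':16}{'Pred. Positive':>16}{'Pred. Negative':>16}")
print(f"{'Actual Positive':16}{c['TP']:>16}{c['FN']:>16}")
print(f"{'Actual Negative':16}{c['FP']:>16}{c['TN']:>16}")
```

On this toy data the counts are TP = 3, FN = 1, FP = 1, and TN = 3, laid out in the same row and column order as the table.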

Use Case: The Confusion Matrix is used across domains to visualize model performance, particularly in multi-class classification tasks like image recognition or sentiment analysis.

Limitations: While powerful, the Confusion Matrix becomes hard to read for multi-class problems, where per-class results are usually summarized with techniques such as macro- or micro-averaging.
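To make the difference between macro- and micro-averaging concrete, the sketch below computes both for Precision on a small three-class problem. The sentiment labels and helper names are hypothetical, and scoring a class with no predicted positives as 0.0 is an assumed convention.

```python
from collections import defaultdict

def per_class_counts(y_true, y_pred):
    """Return {label: {"tp": .., "fp": .., "fn": ..}} using a one-vs-rest view."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0})
    for actual, predicted in zip(y_true, y_pred):
        if actual == predicted:
            counts[actual]["tp"] += 1
        else:
            counts[predicted]["fp"] += 1
            counts[actual]["fn"] += 1
    return counts

def macro_precision(counts):
    """Average the per-class precision values, weighting every class equally."""
    values = [
        c["tp"] / (c["tp"] + c["fp"]) if (c["tp"] + c["fp"]) else 0.0
        for c in counts.values()
    ]
    return sum(values) / len(values)

def micro_precision(counts):
    """Pool TP and FP across classes before dividing."""
    tp = sum(c["tp"] for c in counts.values())
    fp = sum(c["fp"] for c in counts.values())
    return tp / (tp + fp) if (tp + fp) else 0.0

# Hypothetical three-class sentiment labels.
y_true = ["pos", "pos", "pos", "pos", "neg", "neg", "neu", "neu"]
y_pred = ["pos", "pos", "pos", "neg", "neg", "neu", "neu", "pos"]

counts = per_class_counts(y_true, y_pred)
print(f"macro precision: {macro_precision(counts):.3f}")  # 0.583
print(f"micro precision: {micro_precision(counts):.3f}")  # 0.625
```

Macro-averaging treats the small "neg" and "neu" classes as equal to "pos", so it is pulled down by their weaker results; micro-averaging pools all predictions and is dominated by the largest class.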

Importance of AI Performance Metrics

AI performance metrics are indispensable for several reasons:

- Model Evaluation: Metrics provide a standardized way to compare models, ensuring the best-performing one is selected for deployment.
- Real-World Impact: In fields like healthcare, high Recall can save lives by identifying diseases early, while high Precision reduces unnecessary treatments.
- Bias Detection: Metrics like the Confusion Matrix can reveal biases in predictions, such as disproportionate false positives for certain groups.
- Business Decisions: Metrics guide resource allocation, helping organizations prioritize models that align with their goals (e.g., minimizing false positives in fraud detection).
- Regulatory Compliance: In regulated industries like finance and healthcare, metrics ensure models meet legal and ethical standards.
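The bias-detection point above can be sketched by splitting the Confusion Matrix by group and comparing false positive rates. Everything here is illustrative: the data is invented, the group names "A" and "B" are placeholders, and a real fairness audit would involve far more than this single comparison.

```python
from collections import defaultdict

def false_positive_rate_by_group(y_true, y_pred, groups):
    """Return {group: FP / (FP + TN)} computed over actual negatives only."""
    fp = defaultdict(int)
    tn = defaultdict(int)
    for actual, predicted, group in zip(y_true, y_pred, groups):
        if actual == 0:
            if predicted == 1:
                fp[group] += 1
            else:
                tn[group] += 1
    all_groups = set(fp) | set(tn)
    return {g: fp[g] / (fp[g] + tn[g]) for g in sorted(all_groups)}

# Hypothetical data: group labels "A" and "B" are placeholders.
y_true = [0, 0, 0, 0, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

for group, fpr in false_positive_rate_by_group(y_true, y_pred, groups).items():
    print(f"group {group}: false positive rate = {fpr:.2f}")
# group A: false positive rate = 0.25
# group B: false positive rate = 0.50
```

In this toy data, group B receives false positives at twice the rate of group A, the kind of gap that an overall Accuracy figure would hide.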

Popular Keywords in AI Performance Metrics

The field of AI performance metrics is dynamic, with certain keywords trending due to their relevance in research and industry. These include:

- Imbalanced Datasets: Addressing skewed class distributions using metrics like F1 Score and AUC-ROC.
- Explainability: Combining metrics with interpretable models to meet ethical AI standards.
- Multi-Class Classification: Extending metrics like the Confusion Matrix to handle complex tasks.
- Real-Time Evaluation: Metrics for streaming data, such as in autonomous vehicles.
- Fairness and Bias: Metrics to detect and mitigate bias in AI predictions.
- Automated Machine Learning (AutoML): Using metrics to automate model selection and hyperparameter tuning.

The Future of AI Performance Metrics

The landscape of AI performance metrics is evolving rapidly, driven by advancements in AI and growing demands for ethical, transparent, and robust systems. Key trends include:

- Domain-Specific Metrics: Industries like healthcare and autonomous driving are developing bespoke metrics tailored to their needs, such as time-to-detection in diagnostics or safety scores in self-driving cars.
- Fairness and Equity Metrics: New metrics are emerging to quantify bias and ensure equitable outcomes, particularly in sensitive applications like hiring or criminal justice.
- Real-Time Metrics: As AI systems operate in dynamic environments (e.g., IoT devices), metrics for real-time performance monitoring are gaining traction.
- Explainable AI (XAI): Metrics are being paired with explainability frameworks to provide insights into model decisions, enhancing trust and adoption.
- Ensemble Metrics: With the rise of ensemble models and federated learning, metrics that evaluate combined predictions across multiple models are becoming critical.
- Sustainability Metrics: As AI’s environmental impact grows, metrics assessing computational efficiency and energy consumption are emerging.
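As one possible reading of the real-time monitoring trend above, a metric can be tracked over a sliding window of recent predictions instead of a fixed test set. The class name `RollingAccuracy`, the window size, and the stream below are all assumptions made for this sketch.

```python
from collections import deque

class RollingAccuracy:
    """Accuracy over the most recent `window` predictions."""

    def __init__(self, window: int):
        self.outcomes = deque(maxlen=window)  # oldest entries drop off automatically

    def update(self, actual, predicted) -> float:
        """Record one prediction and return the current windowed accuracy."""
        self.outcomes.append(actual == predicted)
        return sum(self.outcomes) / len(self.outcomes)

# Hypothetical stream of (actual, predicted) pairs arriving one at a time.
stream = [(1, 1), (0, 0), (1, 0), (0, 1), (1, 1), (1, 1)]

monitor = RollingAccuracy(window=4)
history = [monitor.update(actual, predicted) for actual, predicted in stream]
print([round(a, 2) for a in history])  # [1.0, 1.0, 0.67, 0.5, 0.5, 0.5]
```

A windowed value reacts to recent errors more quickly than a running average over the whole stream, at the cost of being noisier when the window is small.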


Conclusion

AI performance metrics like Accuracy, Precision, Recall, F1 Score, and the Confusion Matrix are the backbone of machine learning evaluation. Their historical evolution reflects the growing complexity of AI systems, from simple statistical models to deep learning behemoths. These metrics are crucial for ensuring models are accurate, reliable, and fair, particularly in high-stakes domains like medical diagnosis and autonomous systems.

As AI continues to advance, performance metrics will evolve to address new challenges, such as real-time evaluation, fairness, and sustainability. By staying informed about these metrics and their applications, data scientists and organizations can build AI systems that are not only powerful but also ethical and impactful.

Keywords: AI performance metrics, Accuracy, Precision, Recall, F1 Score, Confusion Matrix, machine learning evaluation, imbalanced datasets, explainable AI, fairness in AI, real-time metrics.


AI Questions and Answers section for What Are AI Performance Metrics?


Visitor asked: how are companies improving ai algorithm

Jeannie’s answer to the question: The question relates to the topic as it discusses the improvement of AI algorithms which could potentially involve the use of AI performance metrics. Companies are enhancing the performance and accuracy of AI algorithms by utilizing AI metrics such as accuracy, precision, recall, and the F1 score.

1. Accuracy: By maximizing this metric, companies make sure their AI model makes the correct predictions most of the time.
2. Precision: It focuses on minimizing false positives. When a high precision is critical for the application, companies tune their AI algorithms to prioritize precision.
3. Recall: It's about minimizing false negatives. Companies focus on this aspect when they want to capture as many positive instances as possible.
4. F1 score: This metric provides a balance between precision and recall. Companies opt for this when they want to maintain equilibrium between both precision and recall.

In this way, using these metrics, companies fine-tune their AI algorithms to increase effectiveness and efficiency. Also, they continue to feed the AI algorithm with more and more data to improve its learning capabilities, hence enhancing its performance.

Date: 2025-04-20
